In today’s data-driven business landscape, enterprise analytics plays a crucial role in informed decision-making and maintaining a competitive edge. Microsoft’s Power BI service has emerged as a powerful tool for organizations seeking robust, scalable, and user-friendly analytics solutions. This post covers four features that make the Power BI service a strong choice for enterprise analytics: mobile access, Microsoft Teams integration, report subscriptions, and data alerts.

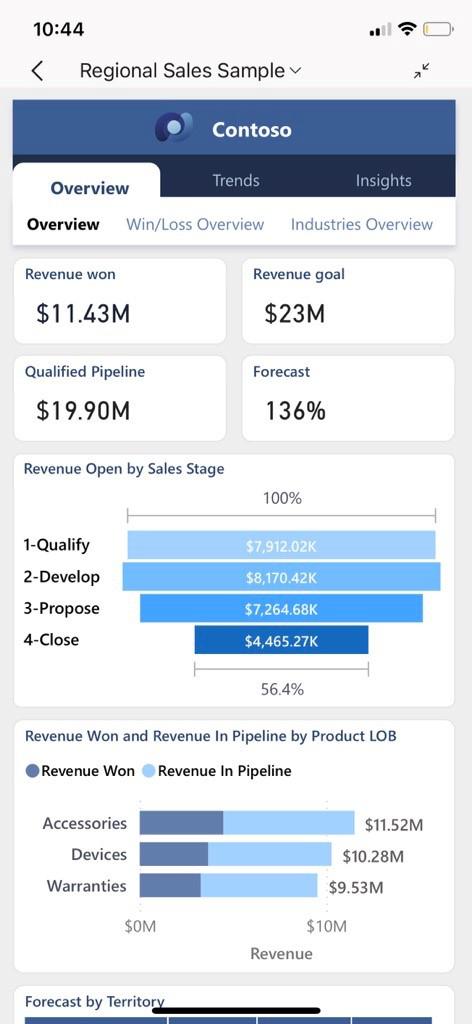

1. Mobile Access: Analytics on the Go

In an increasingly mobile world, the ability to access critical business insights anytime, anywhere is paramount. Power BI’s mobile app brings the full power of your analytics to your smartphone or tablet, enabling you to:

– View and interact with dashboards and reports

– Set up mobile-optimized views of your reports

– Annotate and share insights directly from your device

– Use natural language queries to get quick answers

To get started with the Power BI mobile app, simply download it from your device’s app store. Once installed, log in with your work email address to access your workspace and Power BI reports. This seamless integration ensures that you have the same secure access to your data on mobile as you do on your desktop, maintaining data governance and security protocols.

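- Best Practice: Design your reports with mobile in mind. Use the mobile layout view in Power BI Desktop (labeled “Phone Layout” in older versions) to create mobile-optimized versions of your reports. Dashboards have their own mobile layout, which you edit in the Power BI service.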

The following screenshots illustrate the Power BI mobile app, showing how reports and dashboards appear on a mobile device:

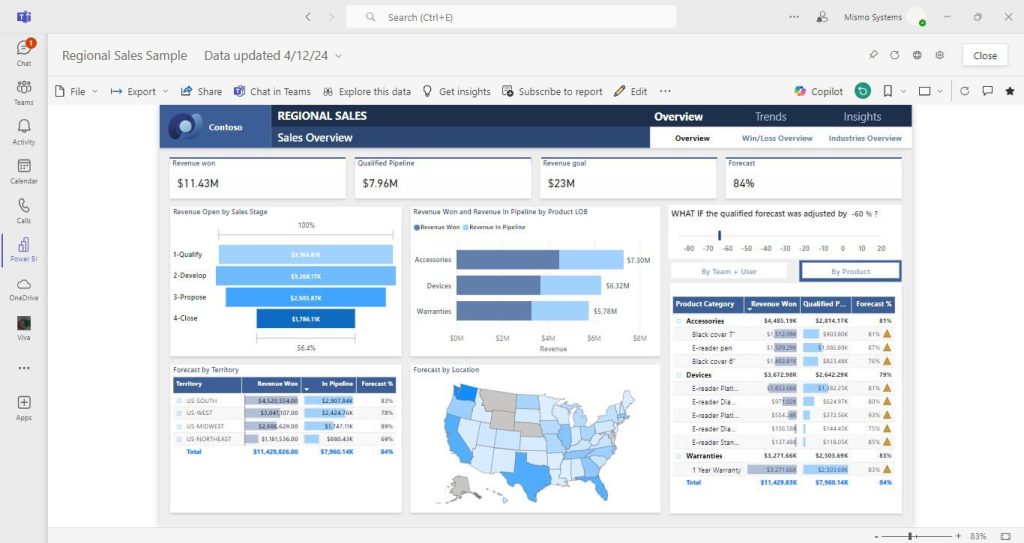

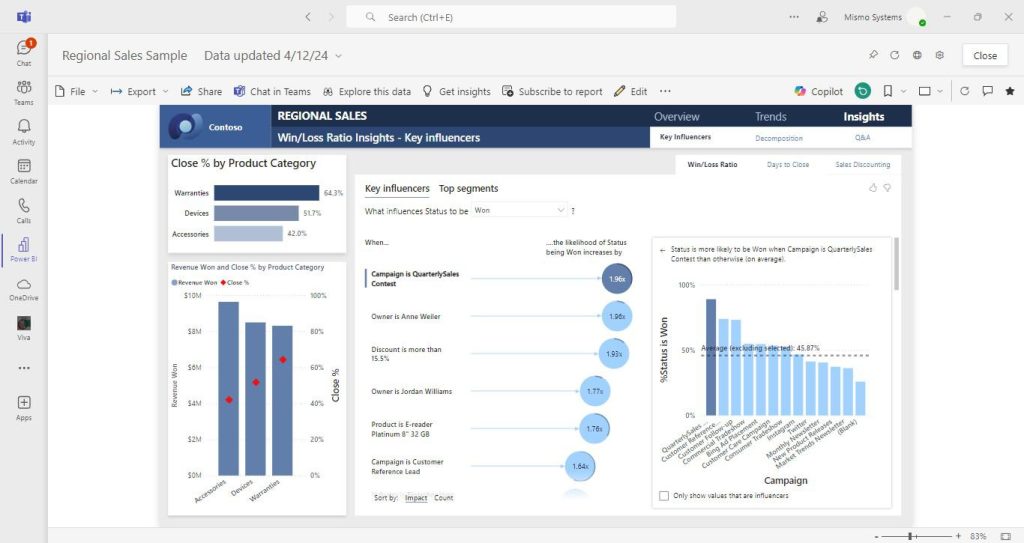

2. Seamless Integration with Microsoft Teams

As remote and hybrid work models become the norm, integration with collaboration tools is more important than ever. Power BI’s integration with Microsoft Teams allows you to:

– Embed interactive Power BI reports directly in Teams channels and chats

– Collaborate on data analysis in real-time with colleagues

– Share and discuss insights without leaving the Teams environment

– Set up data-driven alerts within Teams

- Best Practice: Use the Power BI tab in Teams to create a centralized location for your most important reports and dashboards, making it easy for team members to access critical data within their daily workflow.

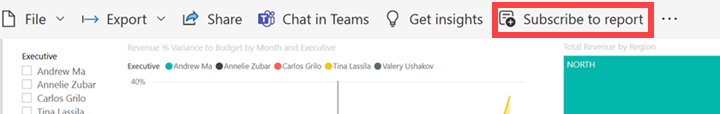

The screenshot below shows how a Power BI report can be viewed on a Microsoft Teams:

3. Automated Report Distribution with Subscriptions

In the high-stakes world of business, staying ahead means staying informed. But let’s face it: nobody dreams of waking up to a flood of reports. That’s where Power BI’s subscription feature comes in, turning information overload into actionable insights at a glance. Instead of drowning in data, decision-makers can now receive a concise snapshot of their most critical metrics right when they need it – whether that’s with their morning coffee or just before a crucial meeting. This smart approach to information sharing ensures that key stakeholders are always equipped with the latest data, without the need to dig through dashboards or lengthy reports. Power BI’s subscription feature allows you to:

– Schedule automatic delivery of reports and dashboards via email

– Set up different subscription schedules for various stakeholders

– Send snapshots or links to live reports

– Manage subscriptions centrally for better control and governance

- Best Practice: Use row-level security in combination with subscriptions to ensure that each recipient only receives the data they’re authorized to view.
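To make the idea behind this best practice concrete, here is a minimal conceptual sketch in Python. It is not Power BI's API or implementation; the data, the email addresses, and the `rows_for` and `snapshot_totals` helpers are all hypothetical. It only illustrates the principle that a security rule filters the rows first, and the snapshot each subscriber receives is built from what remains.

```python
from collections import defaultdict

# Hypothetical sales rows; in Power BI these would live in the data model.
SALES = [
    {"region": "East", "amount": 1200},
    {"region": "West", "amount": 800},
    {"region": "East", "amount": 300},
]

# Hypothetical security rules: subscriber email -> regions they may see.
ALLOWED_REGIONS = {
    "east.manager@example.com": {"East"},
    "director@example.com": {"East", "West"},
}


def rows_for(recipient, rows=SALES, rules=ALLOWED_REGIONS):
    """Return only the rows the recipient is authorized to view."""
    allowed = rules.get(recipient, set())  # unknown recipients see nothing
    return [row for row in rows if row["region"] in allowed]


def snapshot_totals(recipient):
    """Summarize the recipient's visible rows, as a subscription snapshot might."""
    totals = defaultdict(int)
    for row in rows_for(recipient):
        totals[row["region"]] += row["amount"]
    return dict(totals)
```

In this sketch, the regional manager's snapshot contains only East totals, the director's contains both regions, and an address with no rule receives an empty snapshot. Defaulting to "see nothing" is a deliberate choice: a missing rule should never widen access.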

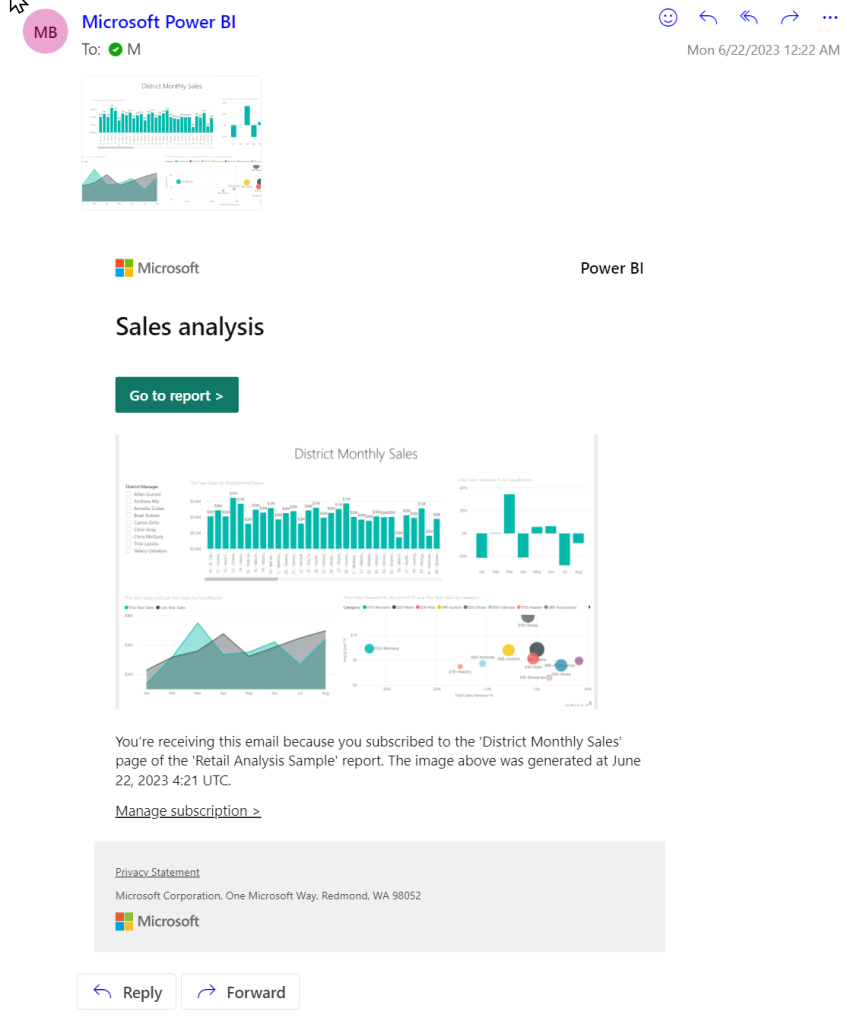

The following screenshot displays the interface for setting up a Power BI report subscription and how the subscription email come in your inbox.

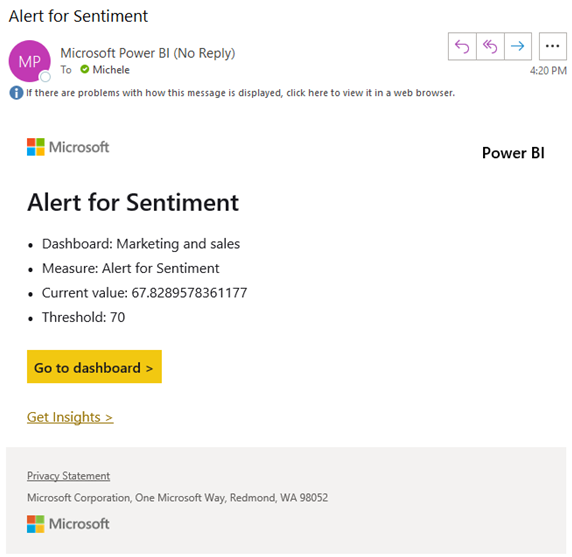

4. Proactive Insights with Data Alerts

To truly excel, businesses need proactive tools that offer real-time insights and early warnings. Power BI’s data alert feature is designed for this purpose: it automatically notifies you of critical changes and anomalies in your data, so you can address issues before they escalate and make decisions with up-to-date information. With data alerts, you can:

– Set up custom alerts based on specific metrics or KPIs

– Receive notifications when data changes meet your defined criteria

– Configure alert sensitivity to avoid notification fatigue

– Share alerts with team members for collaborative monitoring

- Best Practice: Start with a few critical metrics for alerts and gradually expand. This helps prevent alert overload and ensures that notifications remain meaningful and actionable.
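The logic behind a threshold alert with a cap on notification frequency can be sketched in a few lines of Python. This is a conceptual illustration, not Power BI's implementation; the `ThresholdAlert` class, its field names, and the one-hour default interval are assumptions chosen for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class ThresholdAlert:
    """Hypothetical alert rule: fire when a metric rises above a threshold."""
    metric: str
    threshold: float
    min_interval: timedelta = timedelta(hours=1)  # cap on notification frequency
    last_fired: Optional[datetime] = None

    def check(self, value: float, now: datetime) -> bool:
        """Return True if a notification should be sent for this reading."""
        if value <= self.threshold:
            return False  # condition not met
        if self.last_fired is not None and now - self.last_fired < self.min_interval:
            return False  # suppressed: a notification was sent recently
        self.last_fired = now
        return True


# Example: an alert on a hypothetical "open_tickets" metric.
alert = ThresholdAlert(metric="open_tickets", threshold=100)
start = datetime(2024, 1, 1, 9, 0)
first = alert.check(120, start)                          # above threshold
repeat = alert.check(130, start + timedelta(minutes=10)) # within the interval
later = alert.check(130, start + timedelta(hours=2))     # interval has passed
```

Here the first reading triggers a notification, the second is suppressed because it falls inside the minimum interval, and the third triggers again. The interval is what keeps a metric that hovers above its threshold from flooding recipients.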

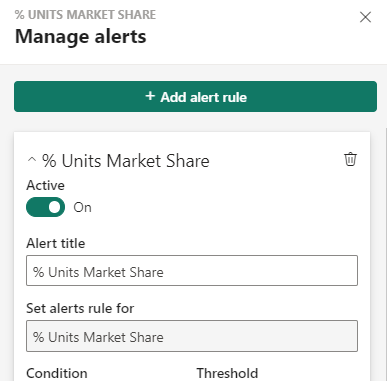

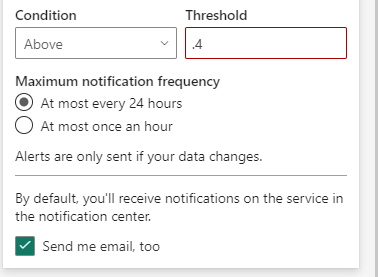

The screenshot below illustrates the process of creating a data-driven alert in Power BI:

Conclusion

Power BI service offers a comprehensive suite of features that cater to the complex needs of enterprise analytics. By leveraging mobile access, Teams integration, automated subscriptions, and proactive alerts, organizations can foster a data-driven culture that empowers employees at all levels to make informed decisions.

As you implement Power BI in your organization, remember that successful adoption goes beyond just the technology. Focus on user training, establish clear data governance policies, and continuously gather feedback to refine your analytics strategy.

By harnessing the full potential of Power BI service, your organization can transform raw data into actionable insights, driving innovation and maintaining a competitive edge in today’s fast-paced business landscape.