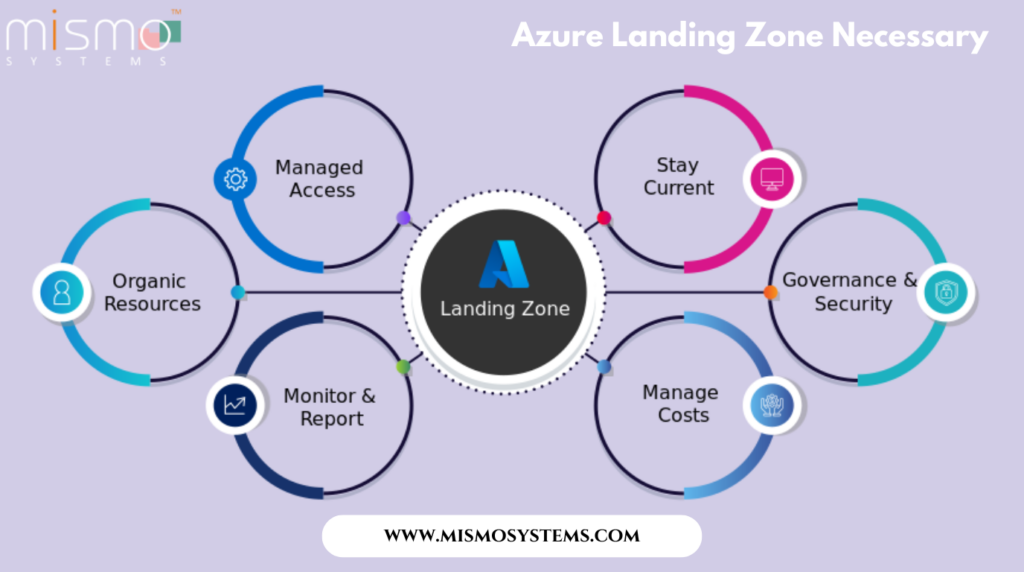

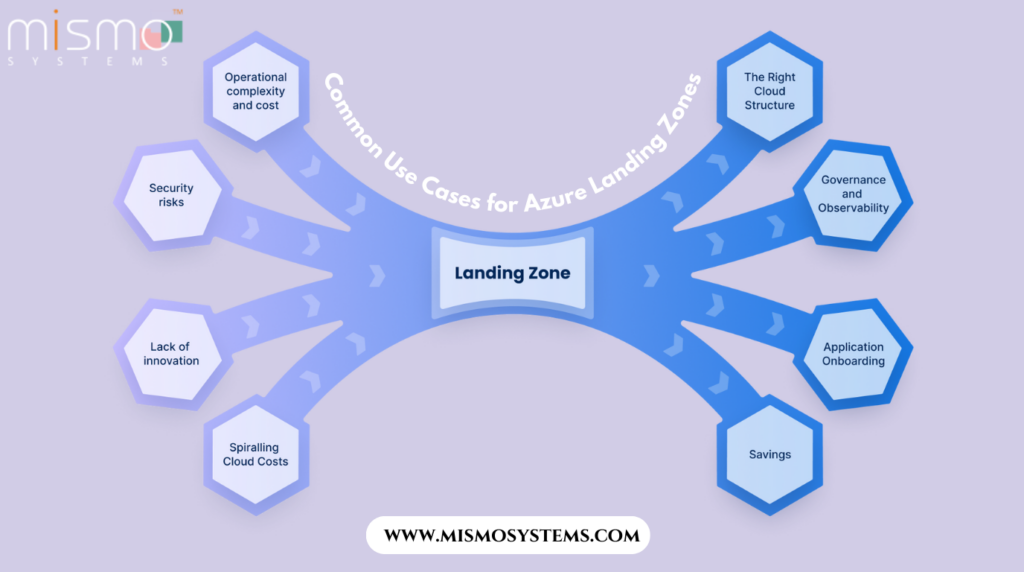


For many years, VMware has been at the forefront of enterprise virtualization, trusted as the platform powering mission-critical on-premises workloads. IT teams have become well versed in the intricacies of vSphere, vSAN, and NSX, and organizations have invested heavily in building robust VMware infrastructures.

However, the pace of innovation keeps accelerating, and many organizations now want to unlock the full potential of the cloud. Azure is a compelling choice for businesses that want on-demand scalability, flexibility, and cost-effectiveness. This blog is a practical guide to VMware migration to Azure: why it’s worth pursuing, the steps for a smooth transition, and more. Let’s dive in!

What is VMware migration to Azure?

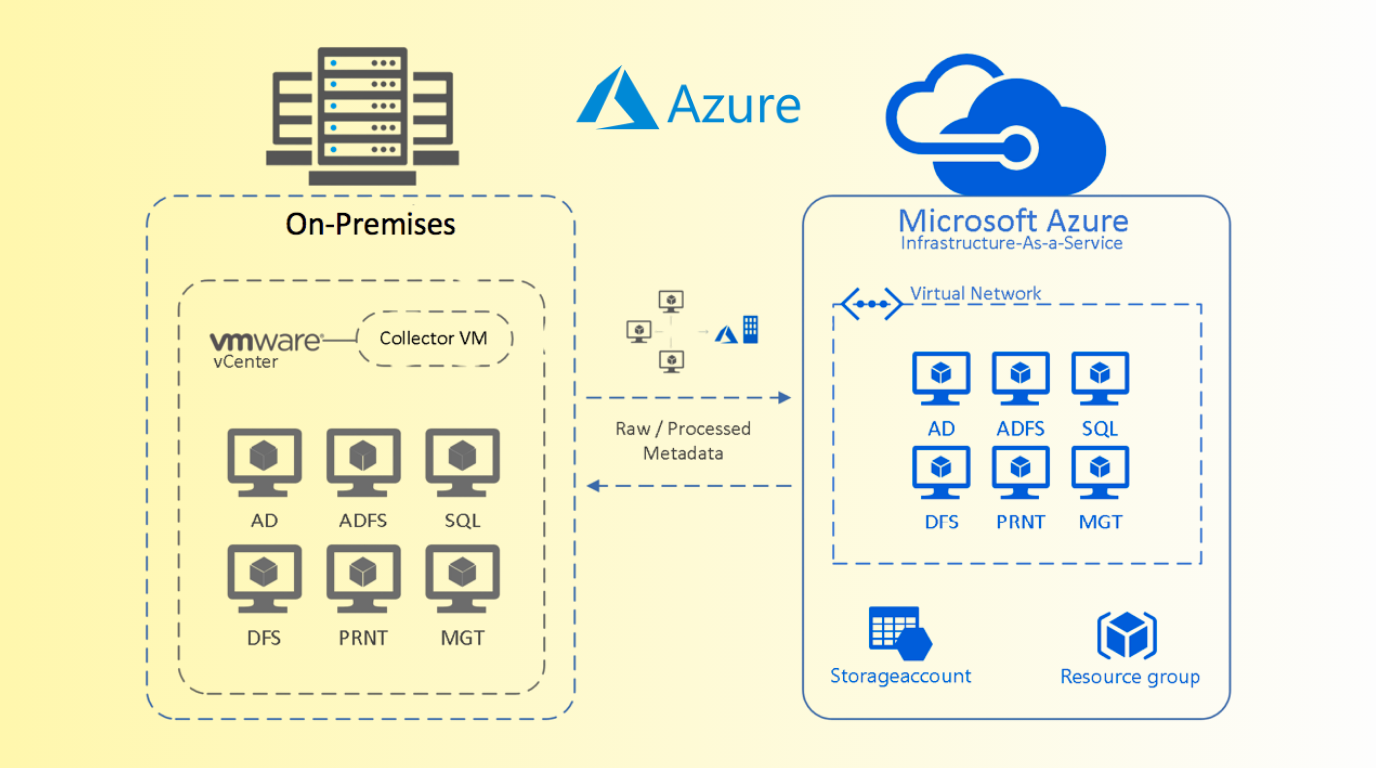

VMware migration to Azure is the process of moving VMware virtual machines (VMs) from on-premises environments to the Microsoft Azure cloud platform. Organizations can take advantage of Azure's cloud capabilities without rebuilding their VMs from scratch. The main objectives are better performance, improved cost efficiency, and greater flexibility. Azure’s built-in features provide scalable infrastructure, a smaller on-premises data center footprint, and streamlined IT resource management. Migrating to Azure also supports key scenarios such as application modernization, disaster recovery, and data center exit strategies.

Did You Know?

- Free to Use: Azure Migrate is available at no additional charge. However, during the public preview, additional charges apply for dependency visualization under the Insight and Analytics offering. Once generally available, this feature will be free.

- Built-in Cost Calculation: Estimate the Total Cost of Ownership (TCO) of running your workloads on Azure IaaS.

- Powered by Azure Site Recovery & Database Migration Service: Azure Migrate builds on these services to simplify migration to the Azure cloud, putting Site Recovery to work for migration rather than only for site-to-site disaster recovery replication.

- Comprehensive Discovery & Assessment: Supports discovery and assessment of on-premises workloads, including Hyper-V, VMware, and physical servers, for seamless migration.

- Built-in Dependency Mapping: Provides high-confidence discovery of multi-tier applications, such as Microsoft SQL Server and Exchange, so interdependent servers can be identified and migrated together.

- Intelligent Rightsizing: Recommends the optimal Azure VM SKU/machine size based on on-premises resource configurations for cost and performance efficiency.

- Pre-Migration Compatibility Reporting: Identifies and provides remediation guidelines for potential migration issues, saving time and effort.

- Database Migration Support: Integrates with Azure Database Migration Service for database discovery and migration.

- PXE Boot Limitation: Azure does not support network-based booting (PXE). Machines relying on network streaming configurations cannot be migrated directly.
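To make the rightsizing idea concrete, here is a minimal Python sketch that picks the smallest VM size from a candidate list that still covers a server's peak CPU and memory use after applying a headroom multiplier. The `comfort_factor` parameter is loosely modeled on the headroom setting in Azure Migrate assessments, but the selection logic, the `VmSize` type, and `recommend_size` are illustrative assumptions, not Azure Migrate's actual algorithm. The catalog uses real Dsv3/Esv3 vCPU and memory figures but is deliberately tiny and ignores pricing and regional availability.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class VmSize:
    name: str
    vcpus: int
    memory_gib: float


# Hand-picked catalog for illustration only; a real assessment considers
# many more sizes, regional availability, and pricing.
CANDIDATE_SIZES: List[VmSize] = [
    VmSize("Standard_D2s_v3", 2, 8),
    VmSize("Standard_E2s_v3", 2, 16),
    VmSize("Standard_D4s_v3", 4, 16),
    VmSize("Standard_E4s_v3", 4, 32),
    VmSize("Standard_D8s_v3", 8, 32),
    VmSize("Standard_E8s_v3", 8, 64),
]


def recommend_size(peak_cores_used: float, peak_memory_gib: float,
                   comfort_factor: float = 1.3,
                   sizes: List[VmSize] = CANDIDATE_SIZES) -> Optional[VmSize]:
    """Return the smallest candidate that covers peak usage plus headroom."""
    needed_cpu = peak_cores_used * comfort_factor
    needed_mem = peak_memory_gib * comfort_factor
    fits = [s for s in sizes if s.vcpus >= needed_cpu and s.memory_gib >= needed_mem]
    if not fits:
        return None  # nothing in this catalog is large enough
    # "Smallest" = fewest vCPUs, then least memory (a crude stand-in for cost).
    return min(fits, key=lambda s: (s.vcpus, s.memory_gib))


if __name__ == "__main__":
    print(recommend_size(1.5, 10).name)  # Standard_E2s_v3
```

With the default 1.3x headroom, a server peaking at 1.5 cores and 10 GiB needs about 1.95 vCPUs and 13 GiB, so the memory-optimized 2-vCPU size wins over the general-purpose one.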

Why are businesses considering migrating from VMware to the cloud?

VMware has long been a trusted platform for businesses, allowing them to run multiple virtual machines (VMs) on a single physical server, each operating as an independent computer with its own OS and applications, all centrally managed. Despite VMware's robust features, moving to the cloud, particularly to a platform like Azure, can significantly enhance a company's IT infrastructure.

Migrating to Azure brings numerous advantages, improving the overall efficiency of IT operations.

Scalability: Azure lets businesses scale resources up or down as demand fluctuates while maintaining consistent performance.

Cost-effectiveness: With Azure’s pay-as-you-go model, organizations pay only for the resources they use, which helps them optimize IT spending.

Microsoft Ecosystem: Azure’s integration with the broader Microsoft ecosystem is a key benefit. It ensures seamless compatibility with enterprise-grade solutions, improving productivity and simplifying workflows.

Security and Compliance: Microsoft Azure is renowned for its robust security offerings, including advanced threat protection, identity management, and encryption, which help safeguard applications and data. Additionally, Azure’s adherence to a wide array of global and industry-specific compliance standards ensures that businesses can meet regulatory requirements.

Global Network: Azure’s extensive global infrastructure provides high availability and low latency, and Microsoft states that Azure has more data center regions than any other cloud provider. This reach lets businesses deploy applications closer to their users, improving performance and user experience.
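To put rough numbers on the pay-as-you-go point, the sketch below compares the monthly compute cost of a VM that runs around the clock with one that is deallocated outside business hours (a deallocated VM stops accruing compute charges, although its disks are still billed). The hourly rate and the 10-hours-by-22-days schedule are made-up placeholders, not Azure prices; use the Azure pricing calculator for real figures. Storage, networking, and reservation discounts are ignored.

```python
HOURS_PER_MONTH = 730  # common billing approximation (8,760 hours / 12)


def monthly_compute_cost(hourly_rate: float, hours_running: float) -> float:
    """Pay-as-you-go compute cost: billed only for the hours the VM runs."""
    return round(hourly_rate * hours_running, 2)


def business_hours(hours_per_day: int = 10, days_per_month: int = 22) -> int:
    """Hours a VM runs if it is started only on working days (assumed schedule)."""
    return hours_per_day * days_per_month


if __name__ == "__main__":
    rate = 0.20  # hypothetical USD per hour, for illustration only
    always_on = monthly_compute_cost(rate, HOURS_PER_MONTH)
    scheduled = monthly_compute_cost(rate, business_hours())
    print(f"Always on: ${always_on:.2f}, business hours only: ${scheduled:.2f}")
    print(f"Saving: {1 - scheduled / always_on:.0%}")
```

With these placeholder values the script prints $146.00 versus $44.00, a saving of about 70%; the point is the shape of the calculation, not the specific figures.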

The acquisition of VMware by Broadcom has led to a shift from VMware's traditional perpetual licenses to a subscription-based model, increasing costs for many organizations. These billing changes, along with limited purchasing options for smaller businesses, have prompted companies to look for more financially attractive alternatives. The need for flexibility and dynamic scalability has also driven the surge in VMware migrations. Azure lets businesses adjust IT resources quickly without significant extra cost, a flexibility that contrasts sharply with more rigid on-premises VMware deployments. This combination of cost efficiency and adaptability makes Azure an appealing platform for businesses considering migration.

How to Migrate VMware to Azure?

There are several ways to migrate VMware workloads to Azure. Let’s explore the options.

Agentless Migration of VMware VMs to Azure

Agentless migration is a simple, effective way to move VMware VMs to Azure with minimal downtime. It uses Azure Migrate, a central hub for assessing, replicating, and migrating VMs to Azure. Its key benefit is that no additional software has to be installed on the VMs, which streamlines the process and reduces the risk of compatibility issues. After an initial full copy, replication runs in incremental cycles that keep the Azure copy closely in sync with the source VM. Before cutting over, you can run a test migration to check the VMs in Azure without impacting the production system.
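The idea behind incremental replication can be shown with a toy model. Azure Migrate's agentless replication builds on VMware snapshots and changed block tracking (CBT): after the first full copy, only blocks written since the previous cycle need to be sent. The sketch below simulates that with an in-memory disk that records which block indexes were written. `SourceDisk`, `BLOCK_SIZE`, and the two replication functions are hypothetical names for illustration; real CBT data comes from the hypervisor, not from application code.

```python
from dataclasses import dataclass, field
from typing import List, Set

BLOCK_SIZE = 4  # bytes per block; tiny on purpose so the example stays readable


@dataclass
class SourceDisk:
    """Toy disk that records which blocks changed, similar in spirit to VMware CBT."""
    blocks: List[bytes]
    changed: Set[int] = field(default_factory=set)

    def write(self, index: int, data: bytes) -> None:
        if len(data) != BLOCK_SIZE:
            raise ValueError("writes must be exactly one block")
        self.blocks[index] = data
        self.changed.add(index)  # remember this block for the next cycle


def initial_replication(source: SourceDisk) -> List[bytes]:
    """First cycle: copy every block, then reset the change tracker."""
    source.changed.clear()
    return list(source.blocks)


def delta_replication(source: SourceDisk, replica: List[bytes]) -> int:
    """Later cycles: copy only blocks changed since the last cycle; return how many."""
    sent = 0
    for index in sorted(source.changed):
        replica[index] = source.blocks[index]
        sent += 1
    source.changed.clear()
    return sent


if __name__ == "__main__":
    disk = SourceDisk([b"\x00" * BLOCK_SIZE for _ in range(8)])
    replica = initial_replication(disk)        # full copy of all 8 blocks
    disk.write(2, b"abcd")
    disk.write(5, b"wxyz")
    print(delta_replication(disk, replica))    # 2
    print(replica == disk.blocks)              # True
```

Only two of eight blocks cross the wire in the second cycle, which is why later replication cycles, and the final sync at cutover, are much smaller than the initial copy.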

Agent-based Migration of VMware VMs to Azure

Agent-based migration of VMware vSphere VMs to Azure requires installing a migration agent (the Mobility service) on each VM. This gives more granular control and enables advanced features such as multi-tier application support and application-consistent snapshots. Although installing agents adds complexity, the approach offers a high degree of customization, which makes it well suited to complex migration scenarios. It also provides detailed monitoring and reporting throughout the migration, giving enterprises a comprehensive view of the process on which to base their decisions.

Migrating VMware VMs to Azure with Azure PowerShell (Agentless)

Azure PowerShell offers an agentless, script-based way to migrate VMware VMs to Azure. Automating the assessment, replication, and cutover stages speeds up the migration. The approach suits enterprises with specific automation requirements or those that want to integrate migration tasks into existing deployment pipelines. With PowerShell, teams can customize migration workflows, build in their own requirements, and keep the process aligned with their operational practices, all with a high degree of control.
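Whatever the scripting tool, an automated migration usually boils down to ordered stages with a gate between each one. The Python sketch below models only that control flow: each stage is a plain function, and the pipeline stops at the first failure so a cutover never runs after a failed test migration. The stage functions, `run_pipeline`, and the context keys are hypothetical; in a real pipeline each stage would call the corresponding Azure Migrate cmdlet or API instead of editing a dictionary.

```python
from typing import Callable, Dict, List, Tuple

# Each stage takes a shared context dict and returns True on success.
Stage = Tuple[str, Callable[[Dict], bool]]


def run_pipeline(stages: List[Stage], context: Dict) -> List[str]:
    """Run stages in order, stop at the first failure, return completed stage names."""
    completed = []
    for name, action in stages:
        if not action(context):
            context["failed_stage"] = name
            break
        completed.append(name)
    return completed


# Hypothetical stage implementations standing in for real Azure Migrate calls.
def assess(ctx: Dict) -> bool:
    blocked = ctx.get("blocked", set())
    ctx["ready"] = [vm for vm in ctx["vms"] if vm not in blocked]
    return bool(ctx["ready"])


def replicate(ctx: Dict) -> bool:
    ctx["replicated"] = list(ctx["ready"])
    return True


def run_test_migration(ctx: Dict) -> bool:
    # Pretend the smoke tests pass unless the context says otherwise.
    return ctx.get("tests_pass", True)


def cutover(ctx: Dict) -> bool:
    ctx["migrated"] = list(ctx["replicated"])
    return True


STAGES: List[Stage] = [
    ("assess", assess),
    ("replicate", replicate),
    ("test_migration", run_test_migration),
    ("cutover", cutover),
]


if __name__ == "__main__":
    ctx = {"vms": ["web01", "db01"], "tests_pass": False}
    print(run_pipeline(STAGES, ctx))  # ['assess', 'replicate']
    print(ctx["failed_stage"])        # test_migration
```

Keeping the gate logic separate from the stage implementations makes it easy to plug the same flow into an existing deployment pipeline and to swap individual stages for real tooling one at a time.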

Migrating VMware Virtual Machines (VMs) using Azure VMware Solution (AVS)

Azure VMware Solution (AVS) lets you migrate VMware workloads to Azure while keeping the native VMware architecture, so organizations can run them in Azure without re-engineering their applications. Because AVS provides the same VMware technology stack (vSphere, vSAN, and NSX) on Azure, the migration typically involves lifting and shifting on-premises workloads to AVS with minimal changes, preserving operational continuity. AVS also integrates with Azure services, unlocking capabilities such as on-demand scalability, native disaster recovery options, and additional security features. Businesses can therefore take advantage of Azure's ecosystem while keeping their familiar VMware management tools.

Tools and Services for VMware Migration to Azure

Azure Migrate: The Core Tool

Azure Migrate is the primary tool for migrating on-premises workloads, including VMware VMs, to Azure. This comprehensive service helps you plan, assess, and execute the migration. It provides insights into your existing VMware environment, identifies potential compatibility issues, and recommends the best migration paths.

Azure Site Recovery

Azure Site Recovery (ASR) can replicate VMs from VMware to Azure. It replicates workloads continuously, which makes it a good option for disaster recovery as well as for migrations.

VMware HCX

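VMware HCX is a tool for hybrid cloud environments that facilitates migrations from on-premises VMware environments to VMware on Azure (Azure VMware Solution). It lets organizations migrate large-scale workloads with minimal or no downtime, depending on the migration type: vMotion-based live migrations keep VMs running, while bulk migration involves a reboot at cutover.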

Common Challenges in VMware to Azure Migration

Compatibility Issues

Certain VMware configurations or features may not translate directly to Azure; for example, VMs that rely on PXE network boot cannot be migrated as-is. Identify and test for these issues before proceeding with the migration.
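A simple scripted readiness check can catch such blockers before the migration starts. The sketch below flags VMs that boot over the network (PXE), which Azure does not support, and disks larger than a caller-supplied limit. The `VmInfo` fields, `readiness_issues`, and the 4096 GiB value in the usage example are hypothetical placeholders; take actual limits from the current Azure documentation and the Azure Migrate assessment report.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VmInfo:
    name: str
    boot_mode: str                     # e.g. "disk" or "pxe" (hypothetical field)
    disk_sizes_gib: List[int] = field(default_factory=list)


def readiness_issues(vm: VmInfo, max_disk_gib: int) -> List[str]:
    """Return human-readable blockers; an empty list means no issues were found."""
    issues = []
    if vm.boot_mode.lower() == "pxe":
        issues.append("Network (PXE) boot is not supported in Azure")
    for i, size in enumerate(vm.disk_sizes_gib):
        if size > max_disk_gib:
            issues.append(f"Disk {i} is {size} GiB, above the {max_disk_gib} GiB limit")
    return issues


if __name__ == "__main__":
    inventory = [
        VmInfo("app01", "disk", [128, 512]),
        VmInfo("thin01", "pxe", []),
    ]
    limit = 4096  # placeholder limit for illustration; check Azure's documented limits
    for vm in inventory:
        print(vm.name, readiness_issues(vm, limit) or "ready")
```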

Network Configuration

Migrating VMs involves setting up networking in Azure that mirrors your on-premises configuration. Replicating complex network setups, such as VPNs or load balancers, can be challenging.
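One network check worth automating early: when on-premises networks and Azure virtual networks are connected over a VPN or ExpressRoute, their address spaces must not overlap, or traffic cannot be routed correctly. This sketch uses Python's standard `ipaddress` module to report overlapping ranges before you create the Azure virtual networks; `find_overlaps` is an illustrative helper and the example prefixes are made up.

```python
import ipaddress
from typing import List, Tuple


def find_overlaps(on_prem: List[str], azure_vnets: List[str]) -> List[Tuple[str, str]]:
    """Return (on-premises prefix, Azure prefix) pairs whose address ranges overlap."""
    overlaps = []
    for local in on_prem:
        local_net = ipaddress.ip_network(local)
        for remote in azure_vnets:
            if local_net.overlaps(ipaddress.ip_network(remote)):
                overlaps.append((local, remote))
    return overlaps


if __name__ == "__main__":
    on_prem = ["10.0.0.0/16", "192.168.10.0/24"]  # example on-premises subnets
    azure = ["10.0.128.0/20", "172.16.0.0/16"]    # proposed Azure VNet ranges
    print(find_overlaps(on_prem, azure))          # [('10.0.0.0/16', '10.0.128.0/20')]
```

Here 10.0.128.0/20 sits inside 10.0.0.0/16, so that Azure range would have to be changed before the networks are connected.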

Downtime Management

Ensuring minimal downtime during migration is crucial. Plan for testing periods and utilize tools like Azure Site Recovery to keep services available during the process.
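When planning the cutover window, a back-of-the-envelope estimate helps. With continuous replication in place, downtime at cutover is roughly the source shutdown time, plus the time to ship the final delta, plus boot and validation time in Azure. The formula, the default minutes, and the 80% link-efficiency factor below are simplifying assumptions for illustration, not Azure guarantees; measure your own change rate and bandwidth.

```python
def estimate_cutover_minutes(final_delta_gib: float, bandwidth_mbps: float,
                             shutdown_min: float = 5, boot_and_checks_min: float = 10,
                             efficiency: float = 0.8) -> float:
    """Rough cutover downtime in minutes: shutdown + final sync + boot/validation.

    efficiency models protocol overhead and link contention (an assumption).
    """
    bits = final_delta_gib * 1024**3 * 8
    effective_bps = bandwidth_mbps * 1_000_000 * efficiency
    sync_min = bits / effective_bps / 60
    return round(shutdown_min + sync_min + boot_and_checks_min, 1)


if __name__ == "__main__":
    # 5 GiB of changes since the last cycle over a 100 Mbps link
    print(estimate_cutover_minutes(5, 100))  # 23.9
```

Shrinking the final delta, for example by cutting over right after a replication cycle completes, is usually the most effective lever on this estimate.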

A Quick Guide on VMware Migration to Azure

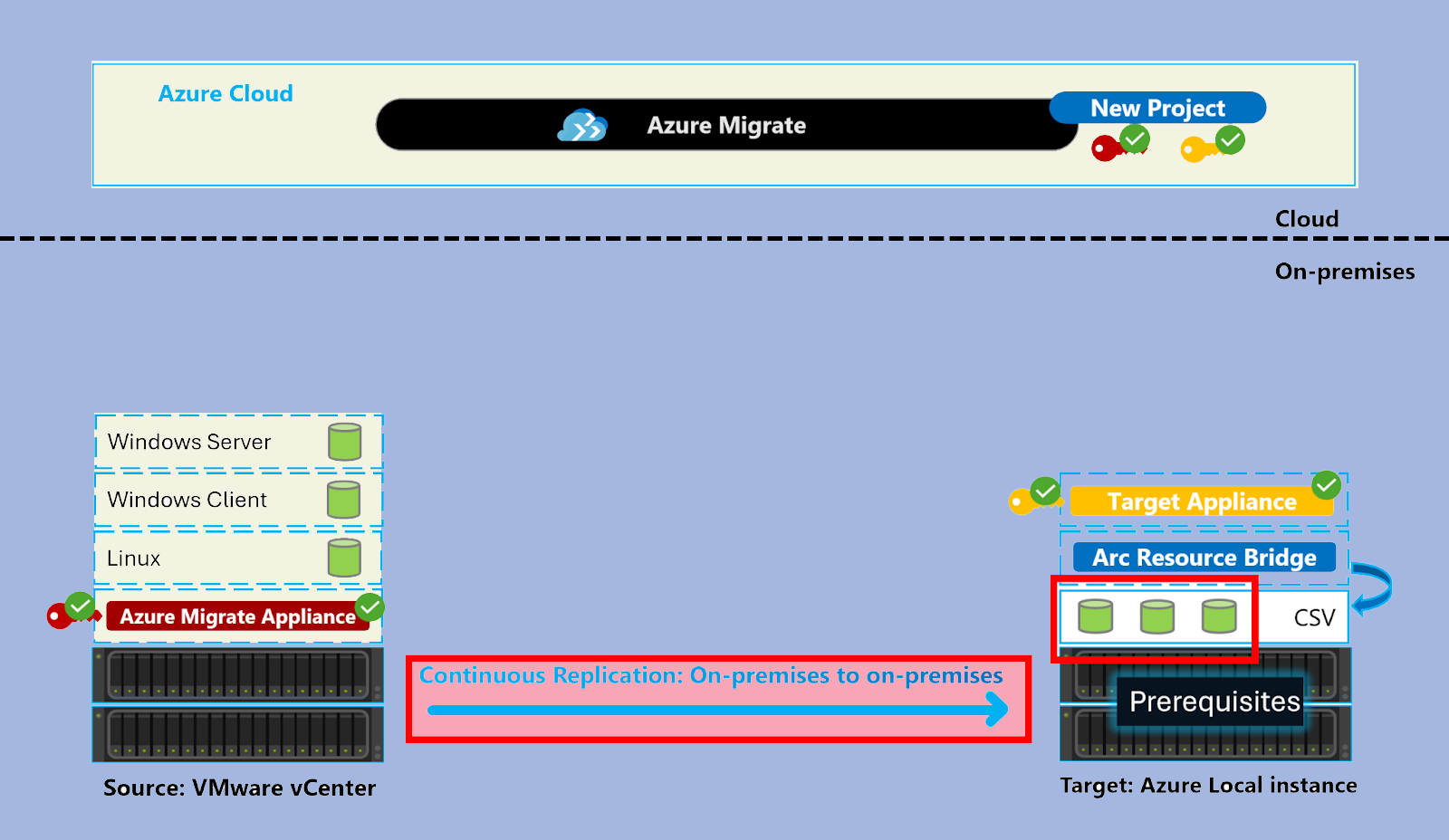

Using a migration tool like Azure Migrate streamlines VMware VM migration to Azure and helps minimize downtime and data loss. It lets you closely monitor the progress and status of each VM throughout the transition. The key early steps are discovering, assessing, and right-sizing on-premises infrastructure, data, and application resources. Below is a breakdown of the overall migration process:

1. Setting Up Azure Migrate:

You can set up Azure Migrate with a few simple steps via the Azure Portal. Once completed, create an Azure Migrate Project to begin the migration journey.

2. Assessment:

Next, add assessment tools to evaluate your server environment and ensure everything is aligned with Azure's requirements.

3. Discovery:

Deploy the Azure Migrate appliance, which you download from the portal, in your VMware environment. The appliance automatically discovers your VMs and gathers configuration and performance data from your VMware setup, which Azure Migrate uses to generate assessment reports. These reports indicate whether the VMs are ready for migration and recommend suitable Azure configurations.
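Discovery data is most useful when servers that depend on each other are migrated together. The sketch below groups servers by treating observed network connections as an undirected graph and collecting its connected components with a depth-first search, so any two communicating servers land in the same migration group. `migration_groups` and the server and connection lists are invented for illustration; in practice the connections would come from Azure Migrate's dependency analysis.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def migration_groups(servers: Iterable[str],
                     connections: Iterable[Tuple[str, str]]) -> List[Set[str]]:
    """Group servers so that any two connected servers end up in the same group."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for a, b in connections:
        graph[a].add(b)
        graph[b].add(a)

    seen: Set[str] = set()
    groups: List[Set[str]] = []
    for start in servers:
        if start in seen:
            continue
        # Iterative depth-first search collects one connected component.
        group: Set[str] = set()
        stack = [start]
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            group.add(node)
            stack.extend(graph[node] - seen)
        groups.append(group)
    return groups


if __name__ == "__main__":
    servers = ["web01", "web02", "app01", "sql01", "print01"]
    links = [("web01", "app01"), ("web02", "app01"), ("app01", "sql01")]
    for group in migration_groups(servers, links):
        print(sorted(group))
    # ['app01', 'sql01', 'web01', 'web02']
    # ['print01']
```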

4. Selecting the Right Migration Strategy:

Choosing the correct migration strategy is vital. If minimal changes are needed, the ‘lift and shift’ (rehosting) approach is the simplest and most effective. However, if you want to optimize applications or use Azure's Platform-as-a-Service (PaaS) offerings, a ‘refactoring’ strategy is a better fit.

5. Performing the Migration:

Once the optimal strategy is chosen, you can proceed with the migration process. Azure Migrate allows you to replicate VMs from your VMware environment to Azure. This step also involves executing the cutover, syncing final data, and fully transitioning to the Azure environment.

6. Validate and Test:

Throughout the migration, it’s essential to perform rigorous validation and testing to ensure that the migrated VMs work as intended in Azure. This step is crucial to identify and resolve any potential issues before they impact production environments, ensuring a seamless transition.

7. Post-migration Activities:

After migration, enterprises should focus on optimizing their VMs within Azure. Post-migration activities involve continuous monitoring and management to ensure optimal performance. Azure’s management tools, like Azure Monitor, can help you track the health and performance of your resources, maximizing efficiency and simplifying operations in the new environment.
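For the validation and testing step, a quick automated smoke test can confirm that migrated VMs answer on the ports their applications use before users are switched over. This sketch only checks TCP reachability with Python's standard `socket` module; the IP addresses and ports are placeholders, `port_open` and `smoke_test` are illustrative helpers, and a real validation plan should also exercise application functionality.

```python
import socket
from typing import Dict, List, Tuple


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def smoke_test(targets: Dict[str, List[int]], timeout: float = 3.0) -> List[Tuple[str, int]]:
    """Return the (host, port) pairs that could not be reached."""
    failures = []
    for host, ports in targets.items():
        for port in ports:
            if not port_open(host, port, timeout):
                failures.append((host, port))
    return failures


if __name__ == "__main__":
    # Placeholder private IPs of migrated VMs and the ports their apps should expose.
    targets = {"10.1.0.4": [443], "10.1.0.5": [1433]}
    failed = smoke_test(targets)
    print("All checks passed" if not failed else f"Unreachable: {failed}")
```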

A trusted and dependable technology partner can make all the difference.

At Mismo Systems, we recognize the challenges businesses face when migrating to Azure. As a Microsoft Partner, we bring deep and comprehensive expertise in Microsoft and Azure solutions. If you're looking to migrate your VMware VMs to Azure, partnering with Mismo Systems ensures a smooth process, helping you avoid the complexities and achieve a successful migration. Our team of dedicated Azure experts will guide you through every step, ensuring a flawless transition to Azure.

Post-migration Best Practices

For Increased Resilience:

- Secure data by backing up Azure VMs using the Azure Backup service.

- Maintain workload availability and continuity by replicating Azure VMs to a secondary region using Site Recovery.

For Increased Performance:

- By default, data disks are created with host caching set to "None". Review and adjust disk caching settings based on workload requirements.
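Which caching mode fits depends on the disk's I/O pattern. A common rule of thumb from Azure's SQL Server guidance is ReadOnly caching for read-heavy data disks and None for write-heavy disks such as transaction logs, with ReadWrite reserved for applications that handle write-back safely. The helper below encodes that rule of thumb; `recommend_host_caching` is a hypothetical name, the 0.7 read-ratio threshold is an arbitrary assumption, and any choice should be confirmed with performance testing.

```python
def recommend_host_caching(read_fraction: float, is_log_disk: bool = False,
                           read_heavy_threshold: float = 0.7) -> str:
    """Suggest a host caching mode ("ReadOnly" or "None") for an Azure data disk.

    read_fraction is the share of I/O operations that are reads (0.0 to 1.0).
    The 0.7 threshold is an illustrative assumption, not an Azure default.
    """
    if not 0.0 <= read_fraction <= 1.0:
        raise ValueError("read_fraction must be between 0 and 1")
    if is_log_disk:
        return "None"      # SQL Server guidance: no host caching for log disks
    if read_fraction >= read_heavy_threshold:
        return "ReadOnly"  # read-heavy data disks benefit from read caching
    return "None"          # write-heavy or mixed: keep the default


if __name__ == "__main__":
    print(recommend_host_caching(0.9))                    # ReadOnly
    print(recommend_host_caching(0.9, is_log_disk=True))  # None
```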

For Increased Security:

- Restrict inbound traffic using Microsoft Defender for Cloud's just-in-time (JIT) VM access.

- Govern and manage updates for both Windows and Linux machines via Azure Update Manager.

- Control network traffic to management endpoints using Network Security Groups.

- Implement Azure Disk Encryption to protect disks and prevent unauthorized data access.

- Follow Microsoft's guidance on securing IaaS resources, and use Microsoft Defender for Cloud to assess and improve your security posture.
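When designing Network Security Group rules for management endpoints, keep in mind that NSG rules are evaluated in priority order (lower numbers first) and processing stops at the first rule that matches. The sketch below models that for inbound traffic with a simplified match on source prefix and destination port, falling back to deny when nothing matches, as Azure's default deny-all inbound rule does. The `Rule` type and `evaluate_inbound` are simplifications invented for illustration; real NSG rules also match on protocol, source ports, destination addresses, and service tags, and include other default rules.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Rule:
    priority: int              # lower number = evaluated first
    source_prefix: str         # CIDR, or "*" for any source
    dest_port: Optional[int]   # single port, or None for any port
    action: str                # "Allow" or "Deny"


def evaluate_inbound(rules: List[Rule], source_ip: str, dest_port: int) -> str:
    """Return the action of the first matching rule, or "Deny" if none match."""
    src = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        src_match = rule.source_prefix == "*" or src in ipaddress.ip_network(rule.source_prefix)
        port_match = rule.dest_port is None or rule.dest_port == dest_port
        if src_match and port_match:
            return rule.action  # first match wins; later rules are not evaluated
    return "Deny"  # stands in for the final deny-all default rule


if __name__ == "__main__":
    rules = [
        Rule(100, "203.0.113.0/24", 3389, "Allow"),  # RDP only from an admin range
        Rule(200, "*", 3389, "Deny"),               # block RDP from everywhere else
        Rule(300, "*", 443, "Allow"),               # public HTTPS
    ]
    print(evaluate_inbound(rules, "203.0.113.10", 3389))  # Allow
    print(evaluate_inbound(rules, "198.51.100.7", 3389))  # Deny
    print(evaluate_inbound(rules, "198.51.100.7", 443))   # Allow
```

Because evaluation stops at the first match, the narrow allow rule for the admin range must have a lower priority number than the broad deny rule for the same port.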

For Monitoring and Management:

- Deploy Microsoft Cost Management to keep track of resource usage and monitor spending.